The Scortex Blog

News and updates from the Scortex team

Articles

28 Feb. 2025

AI-powered quality control: Scortex and IAR Group join forces to transform industrial quality control with AI

The Scortex team

4 Feb. 2025

7 key responsibilities of a Quality Manager for optimal quality control

The Scortex team

22 Jan. 2025

How to go beyond quality sorting machines with AI

The Scortex team

8 Jan. 2025

How can you optimize production quality with artificial intelligence?

The Scortex team

18 Dec. 2024

What is traceability in quality control? A simple explanation

The Scortex team

10 Dec. 2024

How do manual and automated quality control complement each other?

The Scortex team

21 Nov. 2024

Interview with Hugues Poiget, CEO of Scortex

The Scortex team

12 Nov. 2024

From visual fatigue to visual excellence: how a luxury cosmetics brand revolutionized quality control for its lipsticks

The Scortex team

23 Oct. 2024

Understanding the quality standard in the automotive industry

The Scortex team

9 Oct. 2024

5 ways to control the quality of a product

The Scortex team

18 Sept. 2024

The quality defect library ("défauthèque"): what is it for?

The Scortex team

4 Sept. 2024

Cosmetics production: visual quality defects to avoid

The Scortex team

28 Aug. 2024

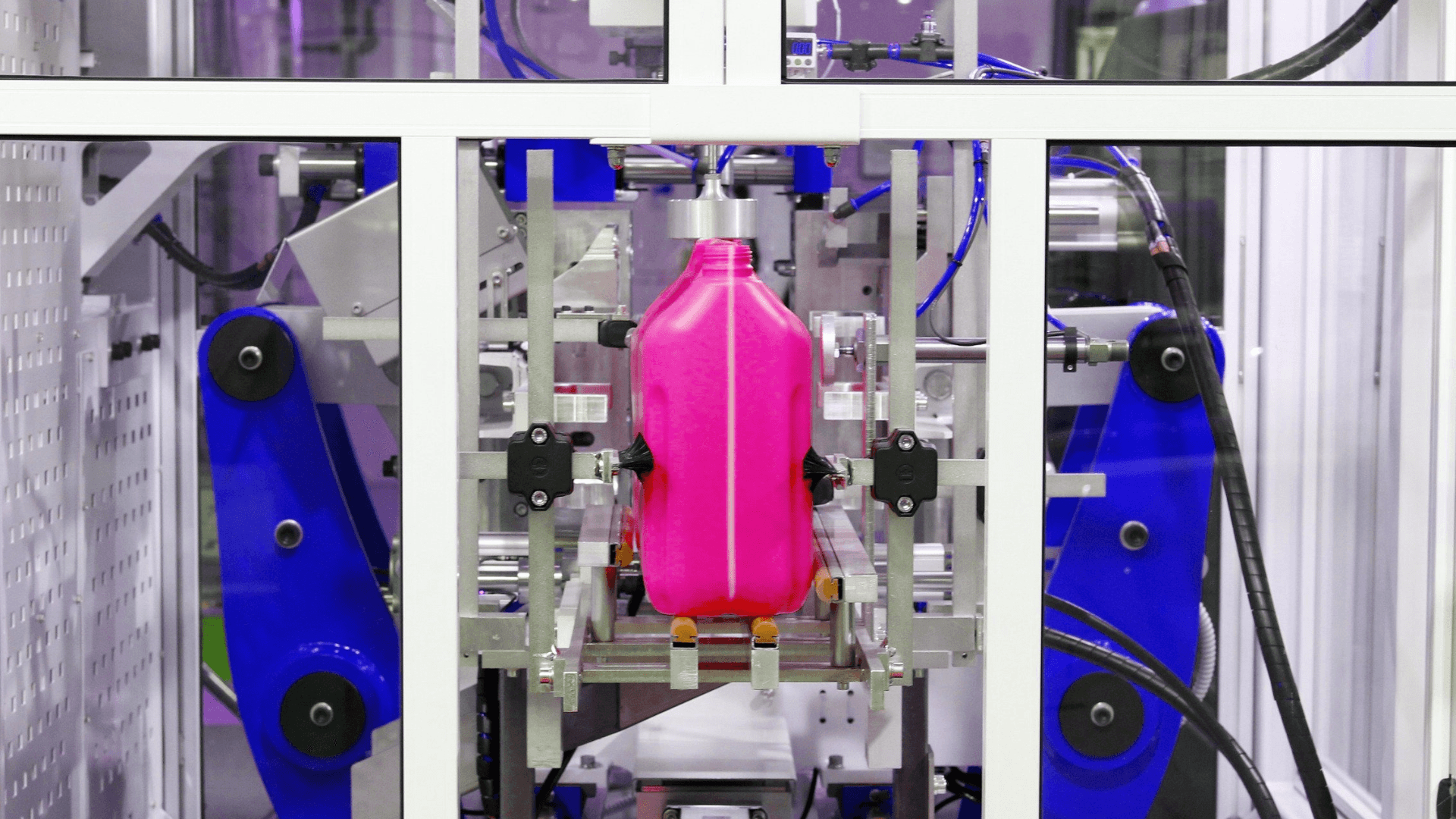

Plastic injection molding: definition and how it works

The Scortex team

9 Aug. 2024

Applications of artificial intelligence in quality control

The Scortex team

24 July 2024

Industry 4.0: what is it? An explanation

The Scortex team

8 July 2024

The advantages of automated inspection in quality control

The Scortex team

26 June 2024

Automating quality control at Toly with Scortex's Spark technology

The Scortex team

18 June 2024

Machine vision: what is it?

The Scortex team

27 May 2024

7 key responsibilities of a Continuous Improvement Manager for optimal quality control

The Scortex team

13 May 2024

Quality inspection of shiny parts: an impossible challenge?

The Scortex team

29 Apr. 2024

Do you need to play politics to succeed with a continuous improvement project in industrial quality?

The Scortex team

17 Apr. 2024

Optimizing traceability after quality control in modern industry: a pillar of reliability and transparency

The Scortex team

29 Mar. 2024

Making quality specifications easy to use for inspection operators - Case study: inspection on an automotive assembly line

The Scortex team

18 Mar. 2024

Quality inspection: what is it for?

The Scortex team

4 Jan. 2023

The user experience of your vision solution affects your return on investment

The Scortex team

9 June 2021

EMVA x Scortex conference

The Scortex team

15 Oct. 2020

Scortex x LVMH Innovation Awards & La Maison des Startups

The Scortex team

28 July 2020

Focus on quality: your greatest opportunity for operational improvement and cost reduction

The Scortex team

4 Dec. 2019

Scortex - Technology partner of COGNITWIN

The Scortex team

6 Nov. 2019

Digitizing quality inspection for the plastics industry

The Scortex team

22 Oct. 2019

Digitizing quality inspection for the forging and foundry industry

The Scortex team

28 July 2019

Our commitment to security

The Scortex team

4 Mar. 2019

An interview with Bill Black - Part II

The Scortex team

19 Feb. 2019

An interview with Bill Black - Part I

The Scortex team

22 Jan. 2019

Scortex & SAP.IO Foundry Paris

The Scortex team

8 Oct. 2018

Scortex at Mondial.Tech - Paris Motor Show 2018

The Scortex team

17 July 2018

Scortex selected for the Microsoft ScaleUp program in Berlin

The Scortex team

13 Apr. 2018

Scortex wins the Grand Prix at the "Startup Automobile" competition

The Scortex team

6 July 2017

We won the i-Lab competition

The Scortex team

15 June 2017

Announcing our seed funding

The Scortex team

2 Apr. 2016

Selected for the Agoranov incubator

The Scortex team